Wearables in Neurodegenerative Disease Detection

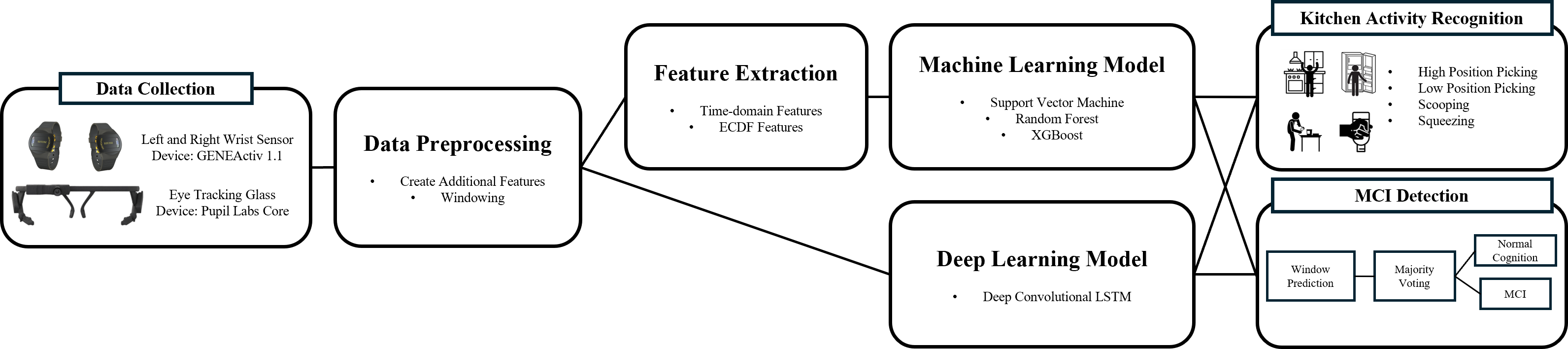

While prior research on wearable-based behavioral sensing for Mild Cognitive Impairment (MCI) has focused primarily on analyzing walking patterns in controlled environments, recent efforts in human activity recognition have turned toward quantifying kitchen activities, an instrumental activity of daily living, because visuospatial deficits in MCI affect functional independence during such tasks. This study investigates the use of wrist-worn and eye-tracking wearable sensors to quantify kitchen activities in individuals with MCI. We collected multimodal data from 19 older adults (11 with MCI and 8 with normal cognition) while they prepared a yogurt bowl. Our multimodal analysis model distinguished older adults with MCI from those with normal cognition with an F1 score of 74%. The feature importance analysis associated weaker upper limb motor function and delayed eye movements with cognitive decline, consistent with previous findings in MCI research. This pilot study demonstrates the feasibility of monitoring behavioral markers of MCI in daily living settings and calls for larger-scale validation in individuals' home environments.
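
For readers unfamiliar with the metric reported above, the following is a minimal sketch of how a binary F1 score can be computed from confusion-matrix counts, treating MCI as the positive class. The function name `f1_score` and the example counts are hypothetical and chosen only for illustration; they are not the study's data. The abstract does not state how its score was averaged across classes, so the binary form shown here is an assumption.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Binary F1 for the positive class from confusion-matrix counts.

    F1 is the harmonic mean of precision and recall, which simplifies to
    2*TP / (2*TP + FP + FN).
    """
    denom = 2 * tp + fp + fn
    if denom == 0:
        # No positive predictions and no positive labels: define F1 as 0.
        return 0.0
    return 2 * tp / denom


# Made-up counts for illustration only (NOT results from the study):
# 8 true positives, 2 false positives, 2 false negatives.
example = f1_score(tp=8, fp=2, fn=2)
print(f"F1 = {example:.2f}")
```

Because F1 ignores true negatives, it reflects only how well the positive class (here, MCI) is identified, which is worth keeping in mind when the two groups differ in size.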